Learning Objectives:

After reading this article, you will be able to:

(1) define culture of safety in the health care setting, (2) explain the role of "shame and blame" and authority gradient as historical barriers to safety culture, and (3) apply the three core principles of building a culture of safety.


Case Study

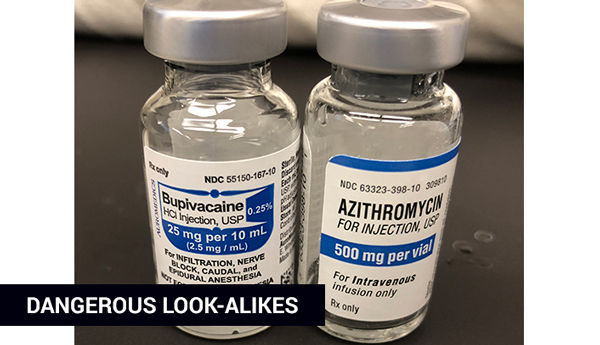

Anesthesiologist Candice Morrissey was called in to a scheduled C-section for a healthy young mother whose labor was not progressing. As she had done countless times before, Candice picked a vial out of the azithromycin container to quickly get antibiotics into the patient before surgery. This time, however, she noticed that the medication, while looking similar to azithromycin, was in fact bupivacaine, a powerful anesthetic. If Candice had given the anesthetic at the antibiotic dose, it would have sent the young patient into cardiac arrest. What happened here?

What is a safety culture?

Safety culture refers to the ways that safety issues are addressed in a workplace. It often reflects “the attitudes, beliefs, perceptions and values that employees share in relation to safety.”1 The health care workplace is recognized as a high-risk, error-prone environment. By one estimate, medical errors account for approximately 250,000 deaths per year in the United States, which would make them the third-leading cause of death behind heart disease and cancer.2

Figure 1. Dangerous look-alikes inspired improvement. Antibiotic packaging has been changed and is now stored in a different location.

The case study above describes a real near-miss: a safety error that was caught before it could harm the patient. Candice filed a safety event report (referred to as “RL” at U of U Health) and has since worked with patient safety and quality improvement pharmacist Shantel Mullin to change the process for delivery of azithromycin.

A history of "shame and blame"

Acknowledging and addressing medical errors has been hampered by medicine’s historical “shame and blame” culture, which frequently applies individual blame to errors instead of identifying and addressing system-based improvements. In 2013, only half of physicians in training thought that errors were handled appropriately at their institution, and nearly a third thought they would be criticized for making mistakes.3

The future: Empower and improve

One goal of patient safety is to create a culture committed to addressing concerns, respecting all involved parties, and creating an environment where people are comfortable drawing attention to medical errors.

It starts with three core principles of building a safety culture:

- Stuff happens. Acknowledge and report errors—we all make mistakes.

- No-blame. Support a no-blame culture by speaking up and encouraging others to raise concerns.

- Continuously improve. Commit to process-driven learning and prevention.

In the opening case study, Candice and her team clearly exhibit all three core principles of safety culture: Candice first acknowledged the problem and reported it, no one was “blamed” for the incident, and she worked with a team to improve the process to prevent future errors.

How to build a culture of safety

Improve communication

Much of the literature on safety culture comes from other high-risk industries such as aviation and nuclear energy. NASA studied errors in aviation and found that up to 70% of them involved communication failures. In those industries, an authority gradient can also contribute to poor communication and errors, and that gradient is very pronounced in medicine. Encouragingly, a focus on communication and teamwork can improve patient care: a 2010 JAMA study of a medical team training program found an 18% reduction in surgical mortality at trained facilities, compared with a 7% reduction at facilities without the training.4

Engage the team

As a team member, you can help to maintain open lines of communication and encourage others to raise concerns if they see potential harm. A simple way to do this is to respond to pages, calls, consults, and concerns in a timely and respectful manner that invites further communication.

As a team leader, you can acknowledge the inherent authority gradient that exists in medicine and take time to ensure that all team members’ concerns are addressed. A pre-procedure “time out” process done correctly is an excellent example of proactive patient safety teamwork. As a team leader, you can also create a culture that encourages communication by highlighting safe practices, reviewing errors, learning from those errors, and avoiding blame.

Have a process for event reporting

All medical professionals witness events that harm patients or have the potential to harm them. A cornerstone of safety culture is reporting these events and near-misses so that they can be evaluated and addressed. Following a 1974 TWA crash that resulted in 92 deaths, the FAA created the Aviation Safety Reporting System, which collects voluntary, confidential reports of events, including near-misses. Since its implementation, aviation fatalities have decreased tenfold.

Every facility has a mechanism for reporting errors or near-misses. These reports can be centrally evaluated to examine trends and cross-unit, cross-service, and system-wide issues that need to be addressed. The goal is to address these issues without blame, reinforcing a culture of continuous improvement where we learn from errors. Unfortunately, one of the limitations of reporting systems is their passive nature; in addition, they can be prone to underreporting. You can address this by reporting errors or near-misses that you witness.

Conclusion

Safety culture reflects “the attitudes, beliefs, perceptions and values that employees share in relation to safety.” Assigning blame is a barrier to building a culture of safety. We know we're thriving when we communicate effectively, engage our teams, and consistently report safety events. And it all starts with frontline individuals and teams (1) acknowledging that errors exist and reporting them, (2) promoting a positive and supportive no-blame environment, and (3) embracing continuous improvement to empower individuals and teams to improve local processes and prevent future errors.

References

- To Err Is Human: Building a Safer Health System (Institute of Medicine 1999 | 3 Minutes) It’s been nearly 20 years since IOM (now NAM, National Academy of Medicine) released this landmark study. Read it to gain important historical perspective.

- Medical Error — The Third Leading Cause of Death in the US (BMJ 2016 | 5 Minutes) Medical error is not included on death certificates or in rankings of cause of death. Martin Makary and Michael Daniel assess its contribution to mortality and call for better reporting.

- Junior doctors and patient safety: evaluating knowledge, attitudes and perception of safety climate (BMJ Quality & Safety 2013 | 6 Minutes) Durani et al suggest taking into account the subtle differences in attitudes toward patient safety found among junior doctors of different grades and specialties.

- Association between implementation of a medical team training program and surgical mortality (JAMA 2010 | 13 Minutes) The Veterans Health Administration implemented a formalized medical team training program for operating room personnel to determine whether a focus on communication and teamwork could impact surgical outcomes.

This article was originally published July 2018.

Candice Morrissey

Peter Yarbrough
