Learning Objectives:

After reading this article, you will be able to:

(1) identify medical errors that result from multiple levels of system failure

(2) examine a medical error from the standpoint of a system, rather than an individual

(3) recognize that humans are prone to error and that systems must therefore be designed to minimize it


Case Study

A middle-aged man with sepsis from a diabetic foot wound was admitted to a rural Utah hospital. It was clear the patient needed to be transferred to University of Utah Health for specialty care. Though the patient was accepted to U of U Health’s medicine service at 4:00 PM, he didn’t arrive until 2:00 AM, by which time he was in severe pain. When the patient arrived, the accepting nurse could not reach a provider to see the patient or place orders. The patient went several hours without pain medications or antibiotics and became sicker. What happened here?

Human Error vs. System Error

An error is defined as the failure of a planned action to be completed as intended, or the use of a wrong plan to achieve an aim.1

Historically, errors in medicine were thought to be caused by a failure on the part of individual providers. In contrast, a systems approach to medical error assumes that most errors result from human failings in the context of a poorly designed system. For example, under a systems view, a wrong-site surgery performed by a physician who was up all night on trauma call is the result of a system that failed to protect both patient and provider from fatigue-related error. Without that view, the same event could be blamed on an individual fatigued provider.2

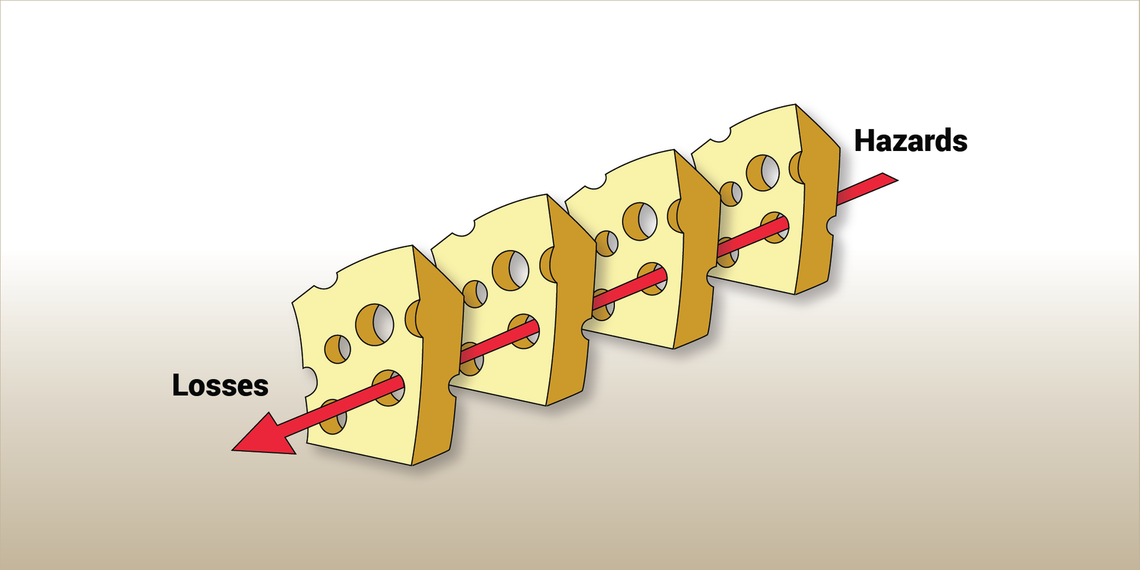

As described by psychologist Dr. James Reason, the defensive layers provided by systems resemble layers of Swiss cheese—except the holes in the cheese are continually changing (Figure 1). The presence of holes in one “slice” does not cause a bad outcome; rather, when the holes in multiple layers of protection line up, harm can come to victims.3

Figure 1. Dr. Reason's "Swiss Cheese Model" for error. Errors occur when holes exist in many layers of system defenses.

The impact of system failures

A systems approach to error aims to identify situations or factors that can lead to human error, then work to improve the underlying systems to minimize the likelihood of error or the impact of error.2 As Dr. Reason said, “We cannot change the human condition, but we can change the conditions under which humans work.”3

What went wrong in this case?

Returning to our case, this patient arrived at U of U Health for specialty physician consultation, but he went hours without the treatment he needed because the nurse struggled to find the provider responsible for his care. This error (failure of the planned action to be completed as intended) resulted from multiple failures, not just one error by one provider. Reviewing errors using a systems perspective can lead to improvements that reduce the likelihood of a similar event occurring again.

Dispel the shame and blame

The people who delivered the care are often best suited to discuss the steps that led to the outcome. Nursing assistants, nurses, residents, physician assistants, nurse practitioners, therapists, and supervising physicians all play a key role in understanding system breakdowns and identifying solutions that prevent future error.

What to do after error occurs

Understand that medical errors are common and can happen to anyone. When a medical error occurs, it is critical to submit an event report and provide your perspective on where the system broke down. Use this as an opportunity to identify vulnerabilities in the system and improve processes to prevent a future event.

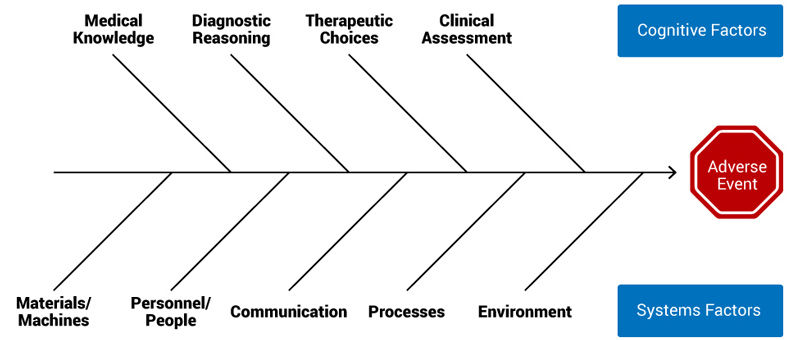

One method for reviewing a medical error is a Modified Fishbone Diagram (Figure 2):

Figure 2. Modified fishbone diagram. Courtesy of the University of Colorado Morbidity and Mortality Steering Committee.

It is critical to incorporate the perspective of everyone involved to complete the Fishbone Diagram. When conducting a case review, invite people from all roles and levels of experience to help discuss the medical error.

Conclusion

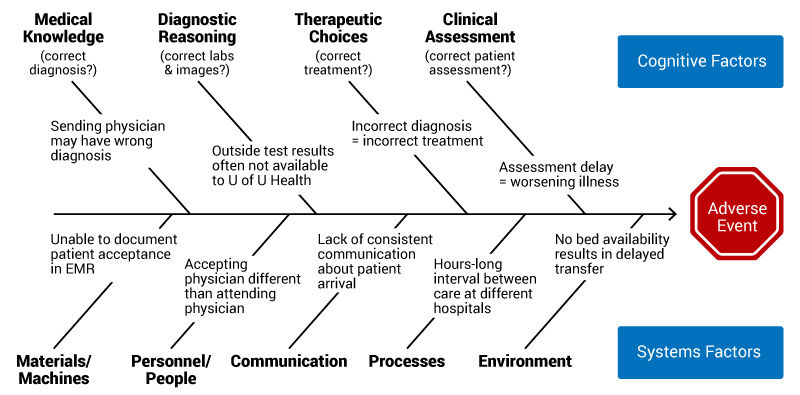

Returning to the case outlined above, we included the transfer center team, the patient placement team, physicians, medicine unit nurses, and internal medicine residents in our systems review of the medical error. In reviewing the error—a patient arriving to our hospital as a transfer, then experiencing a delay in care—we realized that a consistent workflow didn’t exist for knowing which medical teams accepted patients into University Hospital and communicating the arrival of patients to the correct providers.

Figure 3. Modified fishbone diagram of transfer process.

The Fishbone Diagram above (Figure 3) helped highlight where our system broke down. We developed a new transfer workflow and mapped our process. We also developed an External Transfer Tip Sheet to better communicate our process with external providers. Discussing this case and addressing these errors from a systems perspective allowed us to improve this process.

Learn by doing: Get involved in system safety

The U of U Health Safety Learning System (SLS) is a review team that uses a systems-based approach to examine every fatality that occurs in our hospital, and we can always use help. Physicians, APCs, and nurses from all specialties are encouraged to participate. These reviews are robust, gathering input from everyone on the care team. To learn more, please contact me directly.

References

1. AHRQ Archive. Chapter 1. Understanding Medical Errors (AHRQ | Accessed 30 May 2018) An excellent (and super comprehensive) overview of medical errors.

2. AHRQ PSNet Patient Safety Primer: Systems Approach (AHRQ | Accessed 29 May 2018) An expanded version of the content provided in this post, along with a case study, more on Dr. James Reason, and a systems approach to analyzing error.

3. Human error: models and management (BMJ 2000 | 7 Minutes) Excellent read from Dr. James Reason (the Swiss cheese model maker) that somehow manages to combine Chernobyl, mosquitos, US Navy, and more to tackle error in health care.

Kencee Graves
