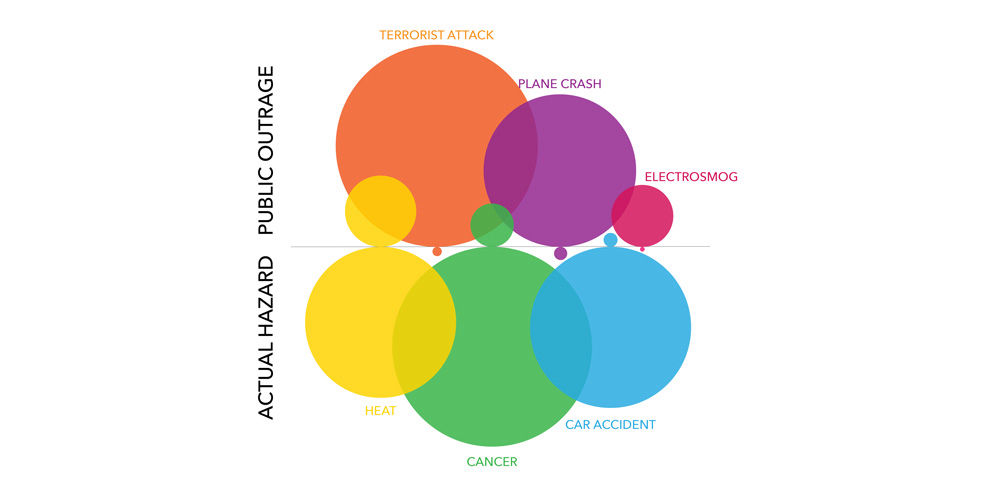

Why do we underestimate risk? This phenomenon isn’t unique to politics or natural disasters, and it contradicts our need to prepare for future events. It explains why it’s hard to get healthy “millennial invincibles” to sign up for health insurance, why earthquake insurance policies skyrocket after an earthquake, and why people tend to underinvest for retirement. Two separate biases, availability and recency, explain why we make medical decisions that follow this perception of risk.

Availability Bias—You’re more likely to think of the risks you know.

Behavioral economists refer to this phenomenon as availability—we assess the likelihood of a negative event based on how readily examples come to our minds. In other words, we believe a familiar risk is more likely to occur than an unfamiliar one. Patients often focus more on a terrible but uncommon illness if they know someone who has had it before. Physicians often refer to a familiar diagnosis when assessing new patients.

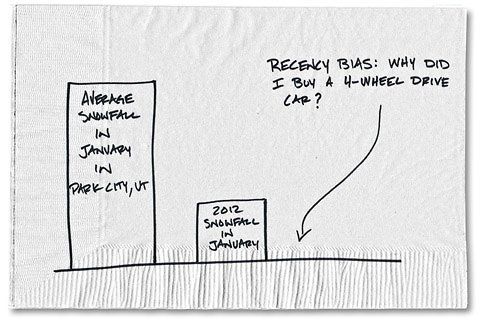

Recency Bias—You’re more likely to consider risks you just experienced.

Recency is best illustrated by a case from my own practice. Many years ago, I saw Michael (not his real name), a healthy gentleman in his 30s. His symptoms of a typical upper respiratory infection masked a rare cause of hearing loss. He ended up losing most of his hearing in one ear, and I felt terrible for missing it, although it’s unlikely that anyone would have recognized the underlying condition in the setting of his most prominent symptoms.

In the year following this incident, any time a patient presented with Michael’s ubiquitous viral cold symptoms, I worried about missing hearing loss. As more time has passed, I am now less likely to think of it. Maybe it was good that it was on my differential diagnosis, but the odds of seeing this again in my career are extremely low. Was I correct in being hyper-vigilant about such a rare condition? Or is this just availability rearing its confusing head? As physicians, we oftentimes can’t distinguish.

Recognizing these biases, particularly in medicine, can significantly influence lives. The pioneering behavioral economist Daniel Kahneman said, “Maintaining one’s vigilance against biases is a chore—but the chance to avoid a costly mistake is sometimes worth the effort.”

Two ways to avoid these biases: Data-driven decisions and teamwork

How can we do this? Luckily, we have built-in tools that help us remain vigilant:

#1 Consistent use of diagnostic and treatment guidelines

Evidence-based medical intervention paired with the physician’s experience provides reliable care for the individual patient. Objectivity doesn’t diminish our clinical experience—it helps us remain vigilant.

#2 Lean on your team

Medical providers are not infallible, and that means we need to seek out and accept the help required to do what is best for the patient. That means saying, “I don’t know.” That means asking for help. That means delegating. That means constant self-assessment and improvement. That means working as a team.

Kyle Bradford Jones
